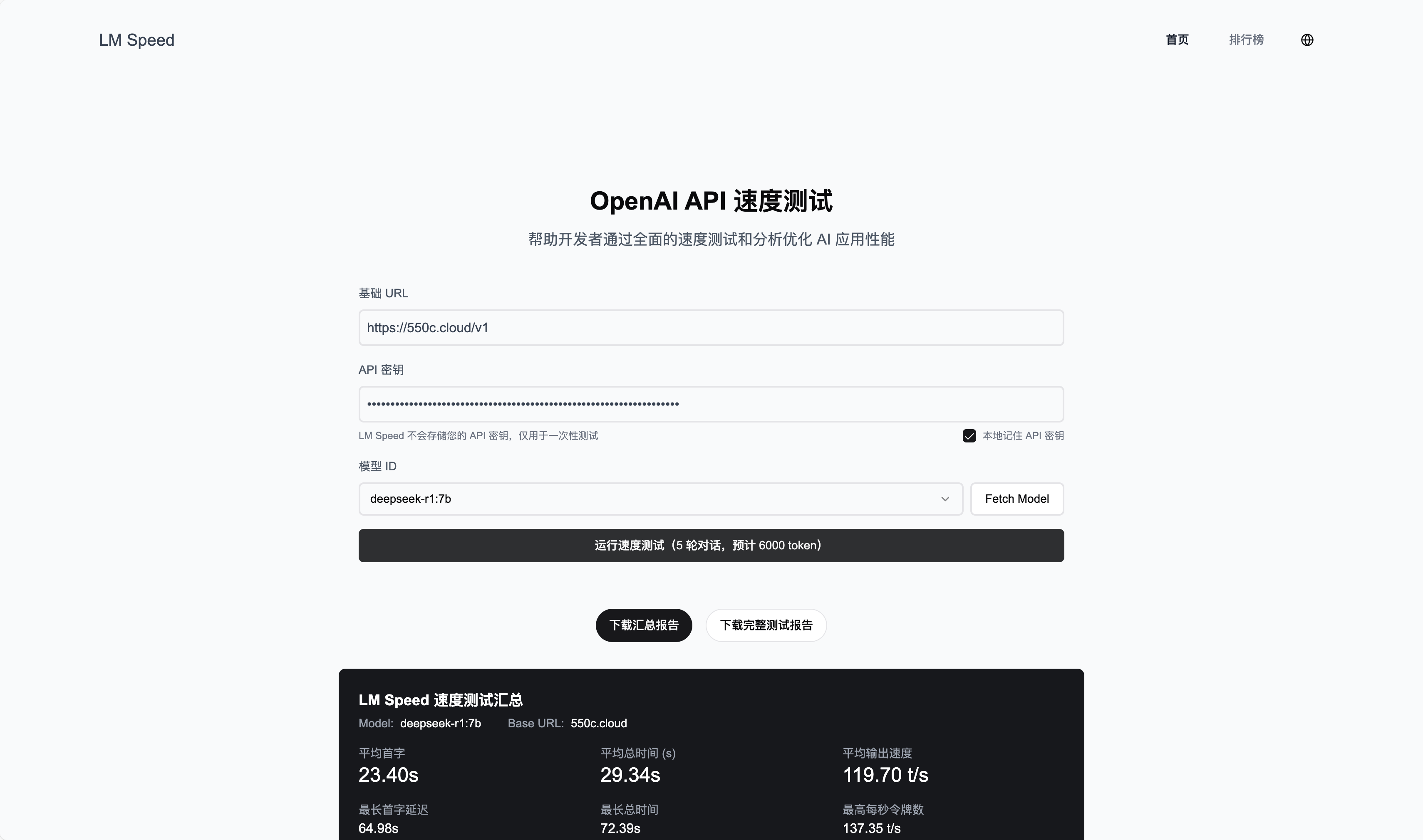

LM Speed - A Simple Large-Model Benchmarking & Analysis Tool

Portal: https://lmspeed.net

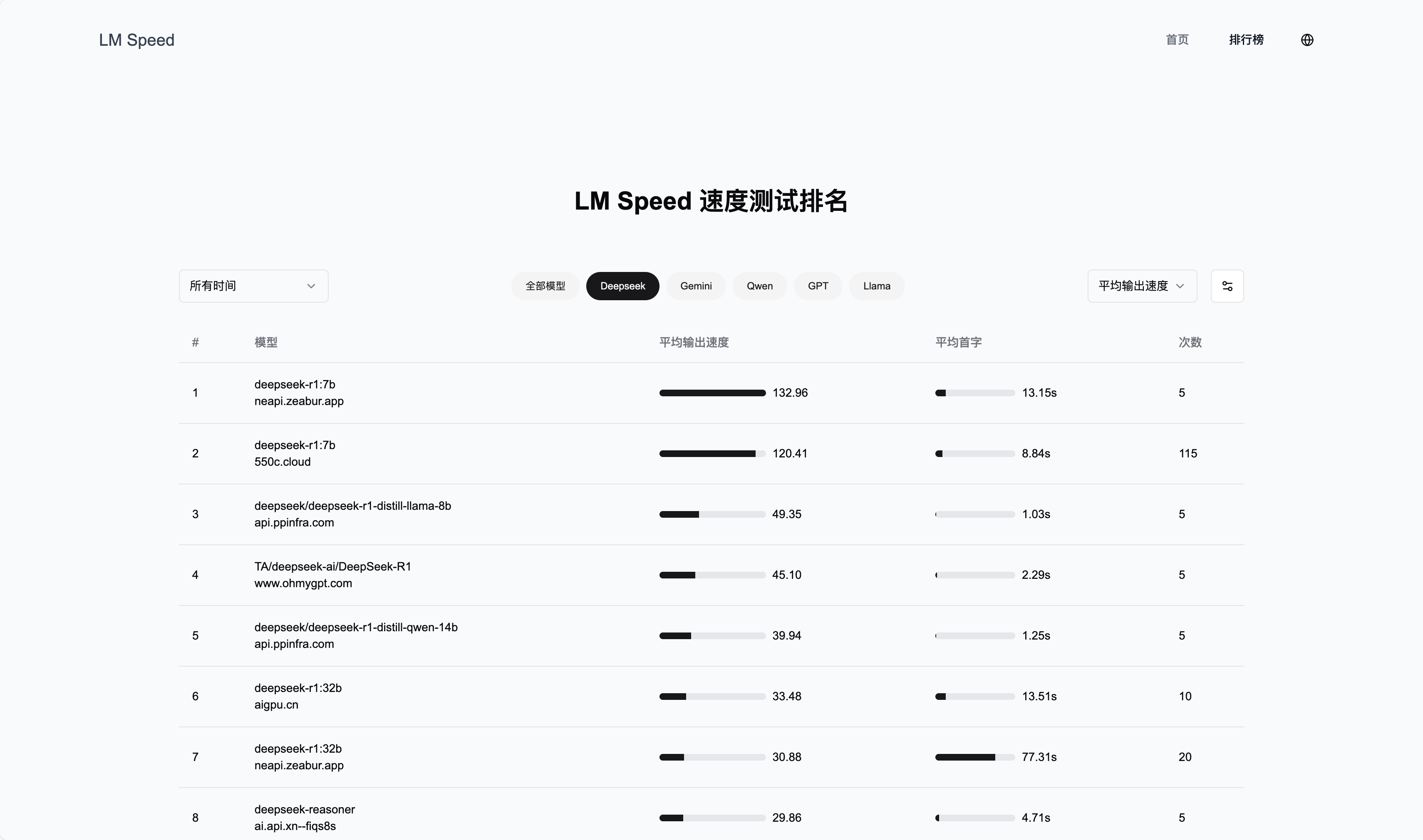

LM Speed provides accurate and reliable OpenAI API performance testing for AI application developers. With multi-dimensional, real-time analytics, it helps you quickly locate performance bottlenecks and optimize model invocation strategies. It also offers a clear leaderboard so you can compare and choose the most suitable models and providers.

Three core pain points solved

1. Opaque response quality

Is DeepSeek’s official API unavailable? Is SiliconFlow too slow? When choosing an LLM API service, developers often struggle to evaluate service quality. Performance varies widely across vendors, and objective benchmarks are rare. LM Speed provides standardized performance tests so you can assess real-world API performance before you commit to development.

2. Hard-to-monitor performance fluctuations

Not sure how fast an LLM API is, or whether the provider is reliable? Traditional tools often measure only response time, which doesn’t fully reflect real performance. LM Speed runs five consecutive stress rounds with dynamic streaming monitoring. It counts tokens precisely with tiktoken, analyzes response time, and automatically generates a three-dimensional performance profile (max, min, and average), so you can fully understand an API’s behavior.
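To make the idea concrete, here is a minimal sketch of how a multi-round streaming measurement could be structured. It is not LM Speed's actual implementation. The names `RoundResult`, `measure_stream`, `run_rounds`, and `summarize` are hypothetical, as is the `make_stream` callable, which is assumed to open a fresh streaming request and yield text chunks. The token counter is passed in so the sketch stays independent of any particular tokenizer.

```python
import time
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, Iterable, List


@dataclass
class RoundResult:
    first_token_latency: float  # seconds from start to the first non-empty chunk
    total_time: float           # seconds from start to the end of the stream
    tokens: int                 # output tokens as reported by count_tokens

    @property
    def tokens_per_second(self) -> float:
        return self.tokens / self.total_time if self.total_time > 0 else 0.0


def measure_stream(
    chunks: Iterable[str],
    count_tokens: Callable[[str], int],
    clock: Callable[[], float] = time.perf_counter,
) -> RoundResult:
    """Consume one stream of text chunks and time it."""
    start = clock()
    first_latency = None
    parts: List[str] = []
    for text in chunks:
        if text and first_latency is None:
            first_latency = clock() - start  # first visible output arrived
        parts.append(text)
    total = clock() - start
    if first_latency is None:  # the stream produced no text at all
        first_latency = total
    return RoundResult(first_latency, total, count_tokens("".join(parts)))


def run_rounds(
    make_stream: Callable[[], Iterable[str]],  # hypothetical: opens a new streaming request
    count_tokens: Callable[[str], int],
    rounds: int = 5,
) -> List[RoundResult]:
    """Run several consecutive rounds, one fresh stream per round."""
    return [measure_stream(make_stream(), count_tokens) for _ in range(rounds)]


def summarize(results: List[RoundResult]) -> Dict[str, Dict[str, float]]:
    """Reduce per-round results to a max/min/avg profile per metric."""
    metrics = {
        "tokens_per_second": [r.tokens_per_second for r in results],
        "first_token_latency": [r.first_token_latency for r in results],
        "total_time": [r.total_time for r in results],
    }
    return {
        name: {"max": max(values), "min": min(values), "avg": mean(values)}
        for name, values in metrics.items()
    }
```

With tiktoken installed, one possible token counter is `lambda s: len(tiktoken.get_encoding("cl100k_base").encode(s))`. The choice of encoding is an assumption here and should match the model being tested.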

3. Test results are hard to retain

Performance data is often scattered and hard to accumulate or analyze systematically. LM Speed provides one-click test report generation, automatically consolidating key metrics and environment details. Reports can be exported and shared with your team. It also provides historical data storage and trend analysis to help teams build a complete performance evaluation system.

Key value for users

Data-driven decision support. Comprehensive performance analysis helps you make smarter API selection decisions:

- Real-time performance insight: Live monitoring of TPoS (tokens per second) gives a clear view of API performance. Multi-dimensional real-time charts make trends obvious.

- Full-spectrum evaluation: Covers first-token latency, response time, and other core metrics to provide a complete performance profile.

- Visual decision support: One-click professional test reports support real-time collaboration and cut decision time by an average of 80%. Rich data visualizations help teams decide faster.