I Found the Fastest and Cheapest Way to Deploy FLUX.1 Kontext [dev]

FLUX.1 Kontext [dev] just dropped and immediately caused a stir in the open‑source community. This new model changes the game for image editing. Unlike traditional image generation tools, it understands context, so you can refine an image step by step like a conversation, instead of dumping a pile of prompts and hoping for the best.

From Keyword Matching to Context Understanding

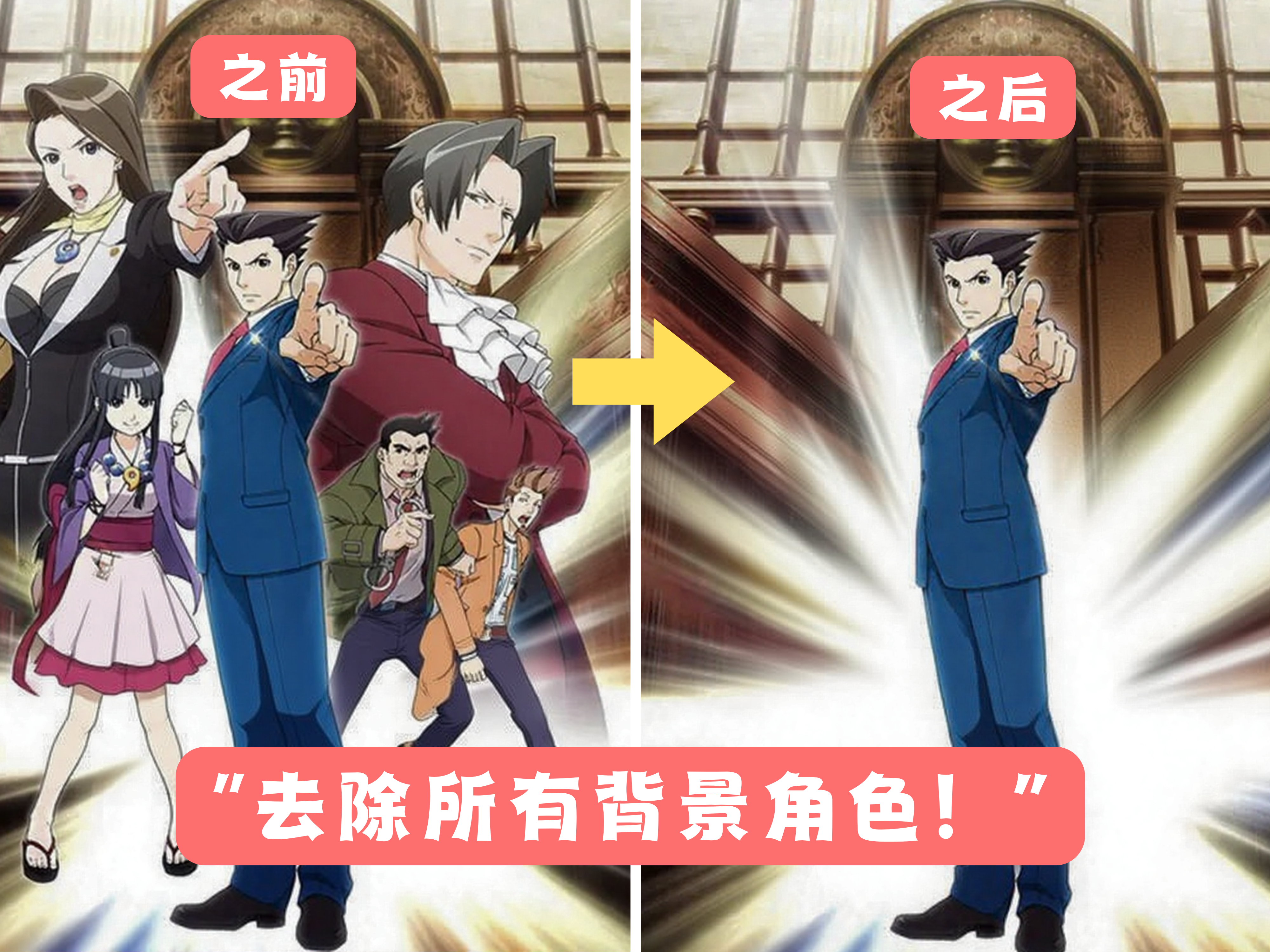

Traditional image models work roughly like keyword matching: you type "a red cat," and they produce an image that fits those words, with no sense of what an existing image already contains. FLUX.1 Kontext [dev] uses a multimodal flow-matching architecture that takes both the text instruction and the input image as context, so it can work out what you actually want changed.

What does that mean in practice? Suppose you want to change the background of a portrait. A traditional model might alter the person too, because it can't tell what should stay. FLUX.1 Kontext [dev] distinguishes the subject (the person) from the environment (the background) and changes only what you specify.

More importantly, it supports multi-round iterative editing. You can say "change the background to a beach," then "make the water bluer," and each instruction is applied to the result of the previous one, so the image is refined step by step instead of being regenerated from scratch.
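The multi-round workflow amounts to a simple loop: each edited image becomes the input for the next instruction. Below is a minimal Python sketch of that loop. The `edit` callable stands in for whatever actually calls the model (for example, an HTTP request to your deployment); its `(image_bytes, instruction) -> image_bytes` signature is a hypothetical assumption, not a documented API.

```python
from typing import Callable, List

# Hypothetical signature: takes (image bytes, instruction text), returns edited image bytes.
EditFn = Callable[[bytes, str], bytes]


def iterative_edit(image: bytes, instructions: List[str], edit: EditFn) -> List[bytes]:
    """Apply instructions one at a time, feeding each result into the next call.

    Returns the original image followed by every intermediate result,
    so earlier versions can be revisited if a later edit goes wrong.
    """
    history = [image]
    for instruction in instructions:
        image = edit(image, instruction)  # refine the latest result, not the original
        history.append(image)
    return history
```

With a stand-in editor such as `lambda img, text: img + b"|" + text.encode()`, calling `iterative_edit(b"photo", ["beach", "bluer water"], fake)` returns three versions, the last being `b"photo|beach|bluer water"`. Keeping the full history is a design choice: it costs memory but makes "undo the last step" trivial.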

30‑Second Deployment Guide

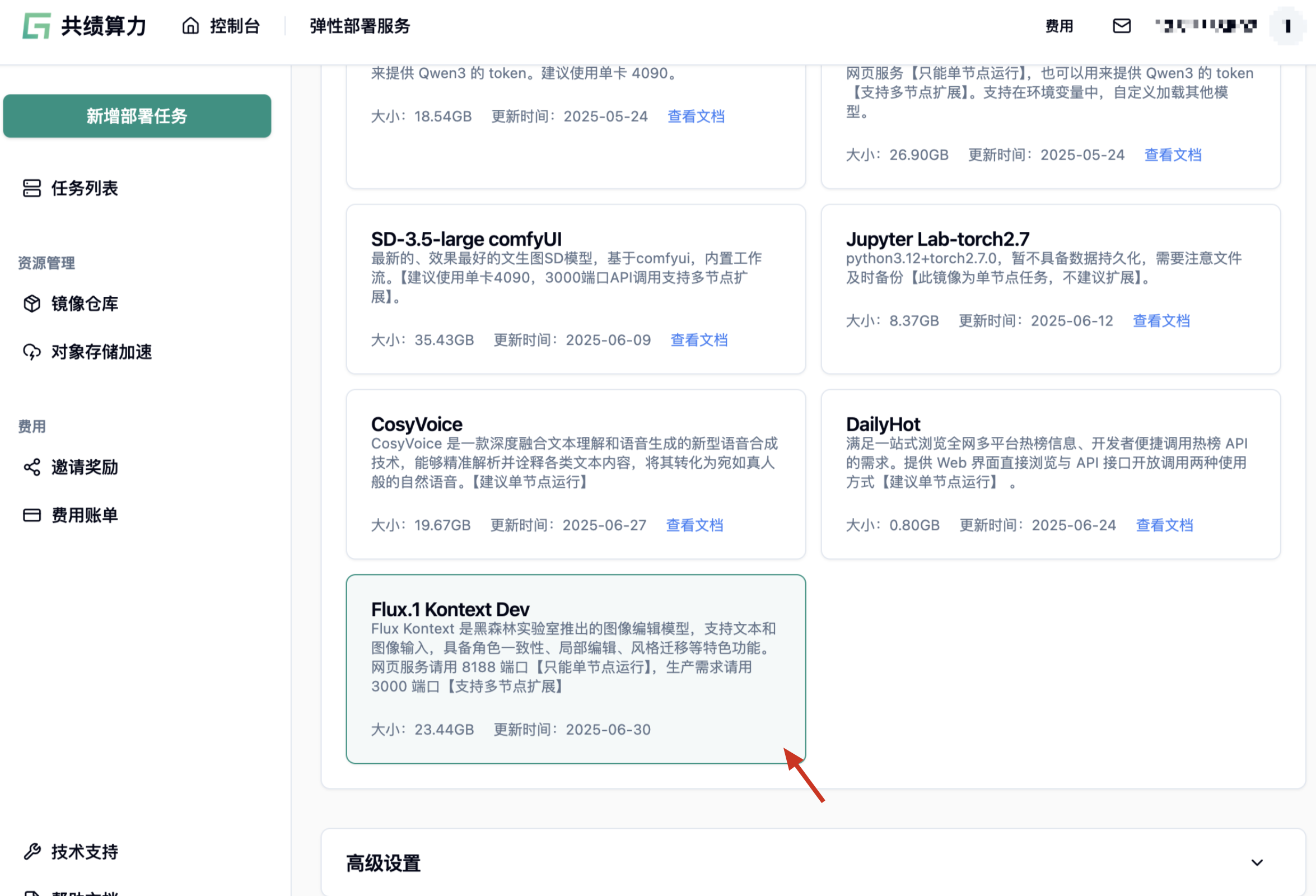

We've integrated FLUX.1 Kontext [dev] into the Gongji Compute platform, so you can try it right away.

- Visit https://console.suanli.cn/serverless/create or click “Get Started” on the website

- Choose a suitable GPU configuration

- Select “Flux.1 Kontext Dev” in service settings

- Click deploy

The whole process takes less than a minute. Once deployed, you can call the model via API.
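The request format for a deployed service isn't described here, so the following is only a minimal Python sketch of what an API call might look like. The endpoint URL, the Bearer-token header, and the JSON field names `image` and `prompt` are all assumptions; check your deployment's console for the real schema before using it.

```python
import base64
import json
import urllib.request

# HYPOTHETICAL placeholder: replace with the endpoint shown in your Gongji Compute console.
ENDPOINT = "https://example.invalid/your-deployment/edit"


def build_edit_request(image_bytes: bytes, prompt: str) -> dict:
    """Package an input image and an edit instruction as a JSON-ready dict.

    The field names "image" and "prompt" are assumptions, not a documented schema.
    """
    return {
        "image": base64.b64encode(image_bytes).decode("ascii"),
        "prompt": prompt,
    }


def send_edit_request(payload: dict, api_key: str, endpoint: str = ENDPOINT) -> bytes:
    """POST the payload as JSON and return the raw response body.

    How the edited image is encoded in the response is deployment-specific.
    """
    req = urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Bearer auth is an assumption; use whatever your console specifies.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return resp.read()
```

Typical use would be `payload = build_edit_request(open("portrait.png", "rb").read(), "Change the background to a beach")` followed by `send_edit_request(payload, api_key="...")`. Splitting payload construction from the network call keeps the request format easy to adjust once you know the real field names.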

Performance

In our tests, FLUX.1 Kontext [dev] ran 3–8x faster than comparable models, largely thanks to its flow-matching architecture. Classic diffusion samplers can need dozens to hundreds of denoising steps, while flow matching aims to learn a nearly straight path from noise to image, so far fewer sampling steps are required.
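To illustrate why a straighter path needs fewer steps, here is a toy Python sketch of flow-matching (rectified-flow style) sampling with a fixed-step Euler solver. It assumes an idealized "oracle" velocity for one known target vector in place of a trained network, so it demonstrates the principle only; it is not FLUX's actual sampler, schedule, or model.

```python
from typing import List


def oracle_velocity(x_t: List[float], t: float, target: List[float]) -> List[float]:
    """Velocity along the straight path x_t = (1 - t) * target + t * noise.

    On that path dx/dt = noise - target, which equals (x_t - target) / t for t > 0.
    A trained model would instead predict this from (x_t, t, prompt) without knowing target.
    """
    return [(xi - yi) / t for xi, yi in zip(x_t, target)]


def euler_sample(noise: List[float], target: List[float], steps: int) -> List[float]:
    """Integrate from t = 1 (pure noise) down to t = 0 (image) using fixed Euler steps."""
    x = list(noise)
    dt = 1.0 / steps
    for i in range(steps):
        t = 1.0 - i * dt  # stays > 0: the last step starts at t = dt
        v = oracle_velocity(x, t, target)
        x = [xi - dt * vi for xi, vi in zip(x, v)]
    return x
```

Because the oracle path is perfectly straight, a single Euler step lands on the target just as accurately as four steps do. A real model's learned paths are only approximately straight, which is why it still uses a modest number of steps rather than one.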

Speed doesn't come at the cost of quality. In our tests, detail preservation and style consistency were excellent, especially in complex scenes, where the model keeps the different elements consistent with one another.

Cost

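On Gongji Compute, GPU time for FLUX.1 Kontext [dev] costs as little as 1.68 RMB per hour; the cost of each image depends on its resolution and complexity, since larger or more complex edits take longer to generate. Compared with overseas commercial APIs, that's about 80% cheaper, and it avoids the latency of cross-border network calls.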
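Turning an hourly rate into a per-image cost is simple arithmetic: multiply the rate by the seconds each image takes and divide by 3600. The Python sketch below assumes you are billed only for the time spent generating; the 10-seconds-per-image figure in the example is a hypothetical value for illustration, not a measured benchmark.

```python
def cost_per_image(rate_per_hour: float, seconds_per_image: float) -> float:
    """Cost of one image when GPU time is billed by the hour."""
    return rate_per_hour * seconds_per_image / 3600.0


def images_per_hour(seconds_per_image: float) -> float:
    """How many images fit into one billed hour at a given generation time."""
    return 3600.0 / seconds_per_image
```

At 1.68 RMB per hour and a hypothetical 10 seconds per image, `cost_per_image(1.68, 10)` is about 0.0047 RMB and `images_per_hour(10)` is 360. If the instance sits idle between requests, the effective cost per image is higher, so measure your own generation times at the resolutions you actually use.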

Best‑Fit Scenarios

FLUX.1 Kontext [dev] is best for image editing tasks that require fine control and multi‑round iteration, such as:

- E‑commerce product images: quickly swap backgrounds, adjust lighting, tweak details without affecting the product itself

- Content creation: tailor illustrations for articles or videos to a specific theme

- Design prototyping: test different visual concepts in early design stages

Traditional image editors either require professional skills or only handle simple tasks. FLUX.1 Kontext [dev] fills this gap, enabling ordinary users to handle complex edits.

Conclusion

FLUX.1 Kontext [dev] signals a new era in image generation. The old “one‑shot generation” model is being replaced by conversational creation, dramatically lowering the barrier to professional image editing.

The model is still evolving quickly. We expect more updates in the coming months, such as video editing support, more art styles, and better understanding of Chinese prompts.

Gongji Compute will keep pace with these updates so users can experience the latest features first. If you need image generation or editing, now is the best time to try FLUX.1 Kontext [dev].