We Built China’s First Serverless GPU Product

In 2025, AI applications took off, and the pace of technological change became hard to ignore. But as developers working deep in the AI field, we repeatedly hit walls in real deployments: GPU rental costs were too high, traditional cloud supply couldn’t match volatile demand, and complex infrastructure management drained our energy. We knew these pain points weren’t just ours; they were industry-wide. So a thought formed: why not build a Serverless GPU platform that truly solves these problems? That’s how Gongji Compute was born. It’s not just a product. It’s our exploration and practice of what AI compute services should be, and we hope it opens a new era of compute for AI innovators.

Why we built Gongji Compute: structural dilemmas in the AI inference market

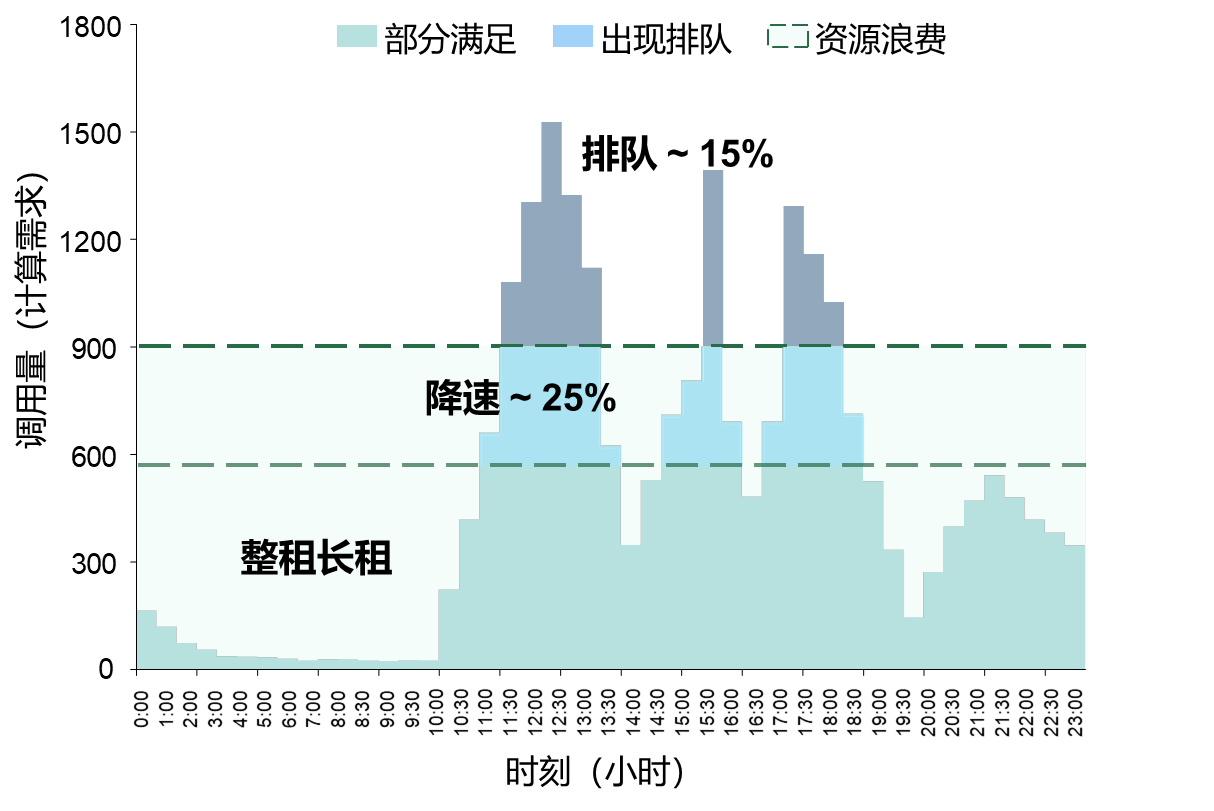

As compute users ourselves, we felt the surge in inference demand firsthand. At the same time, we observed structural problems in China’s compute market. High inference costs are holding back AI adoption and innovation, which pushed us to rethink the model:

- Rigid service, weak elasticity: fixed supply cannot follow fluctuating demand, which hurts efficiency and user experience.

- Traditional model limits growth: long-term leases and heavy fixed costs slow iteration.

- Complex management, low efficiency: infrastructure management consumes engineering time.

- Resource mismatch: idle capacity exists alongside GPU shortages.

These problems form an “elasticity, stability, low cost” impossible triangle. Most cloud offerings fall into three types: long-term leases (low cost and stable, but not elastic), on-demand instances (elastic and stable, but high cost), and spot instances (low cost and elastic, but not stable). None delivers all three.

Traditional GPU leases don’t match volatile inference demand, which leads to either high idle costs or service interruptions. This is the core problem we set out to solve.
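To make the idle-cost problem concrete, here is a minimal sketch comparing a lease sized for peak demand against pay-as-you-go billing. The 24-hour demand profile is hypothetical, the function names are ours for illustration, and applying the same ¥1.68 per GPU-hour rate (the RTX 4090 price quoted in this article) to both models is a simplifying assumption; in practice, long-term leases are usually priced lower per hour.

```python
def fixed_lease_cost(demand, rate_per_gpu_hour):
    """Cost of leasing enough GPUs to cover peak demand for every hour."""
    peak = max(demand)
    return peak * len(demand) * rate_per_gpu_hour


def pay_as_you_go_cost(demand, rate_per_gpu_hour):
    """Cost when only the GPU-hours actually used are billed."""
    return sum(demand) * rate_per_gpu_hour


def utilization(demand):
    """Fraction of the peak-sized lease that is actually used."""
    return sum(demand) / (max(demand) * len(demand))


# Hypothetical demand: GPUs needed in each of 24 hours (quiet night, busy evening).
demand = [1] * 8 + [4] * 8 + [10] * 4 + [2] * 4
RATE = 1.68  # yuan per GPU-hour, assumed identical for both models

lease = fixed_lease_cost(demand, RATE)
payg = pay_as_you_go_cost(demand, RATE)
print(f"lease sized for peak: ¥{lease:.2f}")                   # ¥403.20
print(f"pay-as-you-go:        ¥{payg:.2f}")                    # ¥147.84
print(f"utilization of lease: {utilization(demand):.0%}")      # 37%
```

Under these assumptions, the leased fleet sits idle for most of the day. More generally, a peak-sized lease only comes out cheaper when its hourly rate is below the pay-as-you-go rate multiplied by the utilization.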

Figure: The conflict between rigid supply and elastic demand directly impacts AI costs and user experience.

To resolve this, we turned to Serverless computing. We believe pay‑as‑you‑go, auto‑scaling, and simplified ops provide the ideal solution for AI inference.

Serverless GPU lets developers call GPU compute on demand without managing hardware, which suits fluctuating inference workloads well. We studied the global Serverless GPU market and saw platforms like RunPod already offering hourly billing and container deployments.

However, China has few platforms focused on Serverless GPU, and limited resources constrain local AI deployment. That’s the key reason we decided to build Gongji Compute.

Our solution: Gongji Compute Serverless GPU platform

Based on a deep understanding of these pain points, we built Gongji Compute (suanli.cn), a Serverless GPU platform dedicated to AI inference. Our goal is to break the “impossible triangle” and truly deliver elasticity, stability, and low cost.

Core value we designed:

- Extreme elasticity: auto-scaling with traffic and millisecond-level metering, eliminating waste and idle cost.

- Ultra-simple deployment: Docker-based, five-step cloud launch, compatible with major platforms, with full technical support.

- Massive resources: nationwide compute aggregation, 10k‑GPU scale pool, high cost‑performance supply (e.g. RTX 4090 as low as ¥1.68/hour).
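As a rough illustration of why metering granularity matters, the sketch below bills the same short job at two granularities using the ¥1.68/hour RTX 4090 price. The rounding rule (round up to the next billing unit) and the function name `metered_cost` are assumptions for illustration; this article does not specify how the platform rounds usage.

```python
MS_PER_HOUR = 3_600_000


def metered_cost(duration_ms, rate_per_hour, granularity_ms=1):
    """Cost of a job when usage is rounded up to whole billing units.

    duration_ms and granularity_ms are integers in milliseconds.
    The round-up rule is an assumption, not a documented platform behavior.
    """
    # Ceiling division: number of billing units needed to cover the job.
    billed_units = -(-duration_ms // granularity_ms)
    billed_ms = billed_units * granularity_ms
    return rate_per_hour * billed_ms / MS_PER_HOUR


RATE = 1.68      # yuan per GPU-hour
job_ms = 90_000  # a hypothetical 90-second inference burst

print(f"per-millisecond metering: ¥{metered_cost(job_ms, RATE, 1):.4f}")            # ¥0.0420
print(f"per-hour metering:        ¥{metered_cost(job_ms, RATE, MS_PER_HOUR):.4f}")  # ¥1.6800
```

For bursty inference traffic made up of many short jobs, the gap between the two lines is the cost that fine-grained metering removes.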

Our self-built idle compute scheduling platform aggregates resources across multiple compute providers, delivering Serverless pay‑as‑you‑go while solving “resource mismatch” through cross-platform integration.

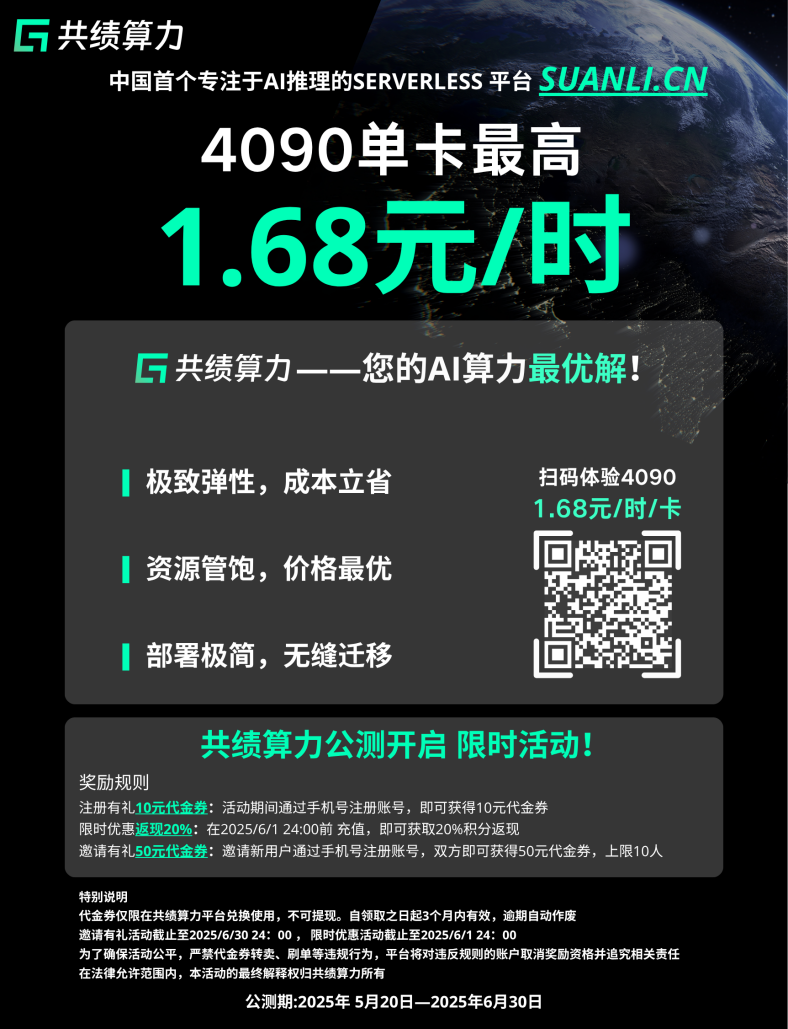

Limited-time offer: experience our Serverless GPU

NVIDIA RTX 4090 single‑card inference: just ¥1.68/hour!

From now until June 18, new users who register and top up for the first time get an extra 20% credits!

Invite friends to use our service: when they sign up with your invite code, both you and your friend get ¥50 credits each!

How to participate: during the event, log into your user dashboard at suanli.cn and top up online to automatically participate and receive bonuses. Final terms are subject to the official site.

Visit suanli.cn now and experience a new era of AI inference, where compute is no longer the bottleneck.